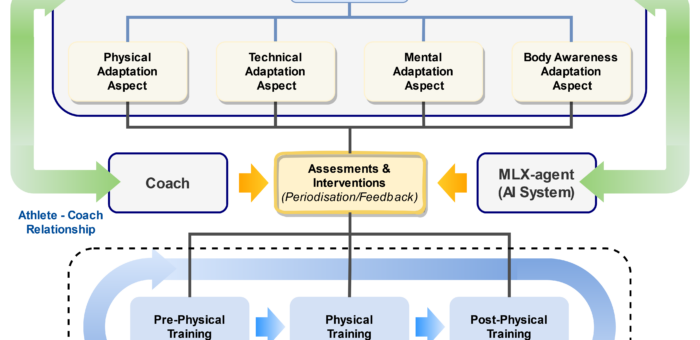

Personalizing running training with immersive technologies using a multimodal framework

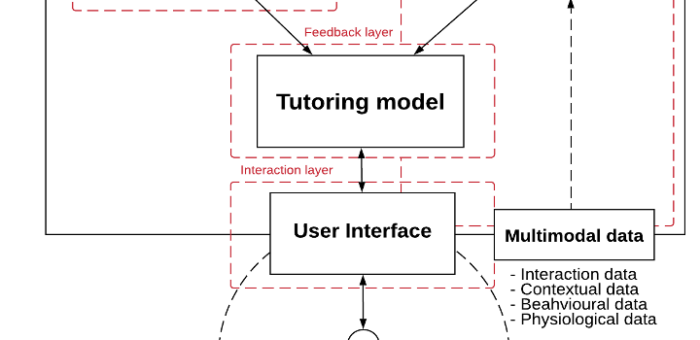

To improve performance and prevent injuries, running training requires proper, personalized supervision and planning. This study examines the factors that influence running training programs, as well as the benefits and challenges of personalized plans. It also investigates how multimodal, immersive, and artificial intelligence (AI) technologies can improve personalized training. We conducted an exploratory sequential mixed-methods study with running coaches. Analyzing the data, we identified relevant factors of the training process and recognized four key aspects of running training: physical, technical, mental, and body awareness. We used these aspects to create a framework that proposes multimodal, immersive, and AI technologies to support personalized running training and lets coaches guide their athletes on each aspect individually. The framework aims to personalize the training by showing how coaches and multimodal learning experience agents…