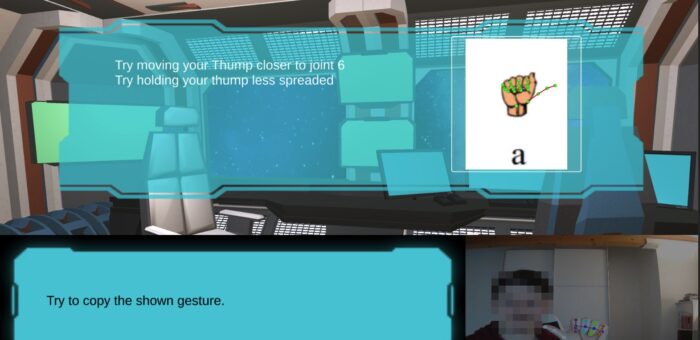

New pub: Using Accessible Motion Capture in Educational Games for Sign Language Learning

A new publication will be presented at the EC-TEL 2023 conference in Aveiro, Portugal: "Using Accessible Motion Capture in Educational Games for Sign Language Learning".

https://link.springer.com/chapter/10.1007/978-3-031-42682-7_74

Abstract

Various studies show that multimodal interaction technologies, especially motion capture in educational environments, can significantly improve and support educational purposes such as language learning. In this paper, we introduce a prototype that implements finger tracking and teaches the user different letters of the German fingerspelling alphabet. Since most options for tracking a user’s movements rely on hardware that is not commonly available, a particular focus is laid on the opportunities of new technologies based on computer vision. These achieve accurate tracking with consumer webcams. In this study, the motion capture is based on Google MediaPipe. An evaluation based on user feedback shows that…
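The abstract says only that the motion capture is based on Google MediaPipe; it does not describe how the prototype decides whether a letter was formed correctly. As a rough illustration only, the sketch below assumes the 21-point hand landmark layout that MediaPipe's hand tracking reports (index 0 is the wrist, 4 the thumb tip, 8 the index fingertip, and so on) and uses a simple distance heuristic to decide which fingers are extended, then compares that result with a target handshape. The heuristic, the `FLAT_HAND` pattern, and every helper name here are hypothetical and are not the paper's method. Real fingerspelling letters also depend on hand orientation and sometimes movement, which this ignores.

```python
import math
from typing import Dict, Iterable, Sequence, Tuple

Point = Tuple[float, float, float]

# Indices follow MediaPipe's 21-point hand landmark layout.
WRIST = 0
THUMB_IP, THUMB_TIP = 3, 4
INDEX_MCP = 5
# (PIP joint, fingertip) index pairs for the four fingers.
FINGER_JOINTS = {
    "index": (6, 8),
    "middle": (10, 12),
    "ring": (14, 16),
    "pinky": (18, 20),
}

# Hypothetical target handshape: four fingers extended, thumb folded.
FLAT_HAND = {"thumb": False, "index": True, "middle": True, "ring": True, "pinky": True}


def extended_fingers(landmarks: Sequence[Point]) -> Dict[str, bool]:
    """Heuristically decide which digits are extended (assumed heuristic).

    A finger counts as extended when its tip is farther from the wrist than
    its PIP joint; the thumb tip is compared with the thumb IP joint by
    distance from the index finger's MCP joint instead.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    wrist = landmarks[WRIST]
    state = {
        name: math.dist(landmarks[tip], wrist) > math.dist(landmarks[pip], wrist)
        for name, (pip, tip) in FINGER_JOINTS.items()
    }
    anchor = landmarks[INDEX_MCP]
    state["thumb"] = math.dist(landmarks[THUMB_TIP], anchor) > math.dist(landmarks[THUMB_IP], anchor)
    return state


def matches_target(landmarks: Sequence[Point], target: Dict[str, bool]) -> bool:
    """Return True when every digit's extended/folded state matches the target."""
    state = extended_fingers(landmarks)
    return all(state[name] == wanted for name, wanted in target.items())


def synthetic_hand(folded: Iterable[str] = ()) -> list:
    """Build a toy 21-point hand for testing (made-up data, not MediaPipe output)."""
    folded = set(folded)
    pts = [(0.0, 0.0, 0.0)] * 21
    pts[INDEX_MCP] = (0.1, -0.1, 0.0)
    pts[THUMB_IP] = (0.2, -0.05, 0.0)
    pts[THUMB_TIP] = (0.1, -0.12, 0.0) if "thumb" in folded else (0.3, 0.0, 0.0)
    for i, (name, (pip, tip)) in enumerate(FINGER_JOINTS.items()):
        x = 0.05 * i
        pts[pip] = (x, -0.2, 0.0)
        # A folded fingertip sits closer to the wrist than its PIP joint.
        pts[tip] = (x, -0.1, 0.0) if name in folded else (x, -0.4, 0.0)
    return pts


if __name__ == "__main__":
    print(matches_target(synthetic_hand(folded={"thumb"}), FLAT_HAND))
```

Using distances from the wrist rather than raw y coordinates keeps this toy check independent of whether the hand points up or down in the frame. In a real webcam setup, the landmark list would come from MediaPipe's hand tracking output for each frame, and a learned classifier would likely be more robust than hand-written rules.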