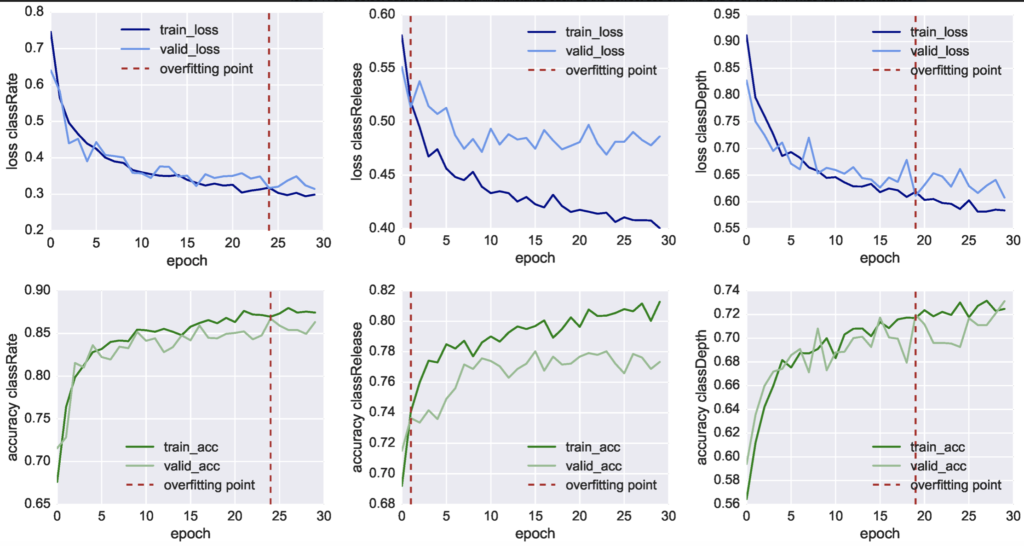

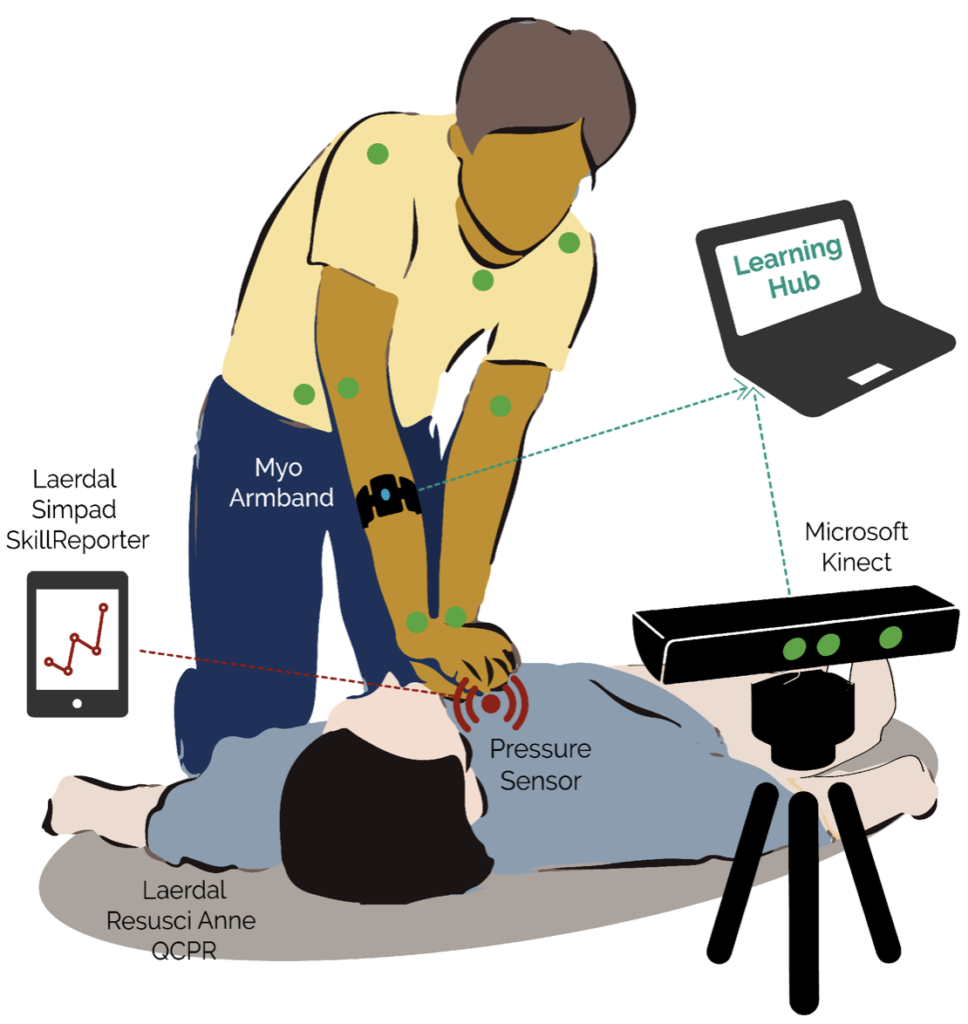

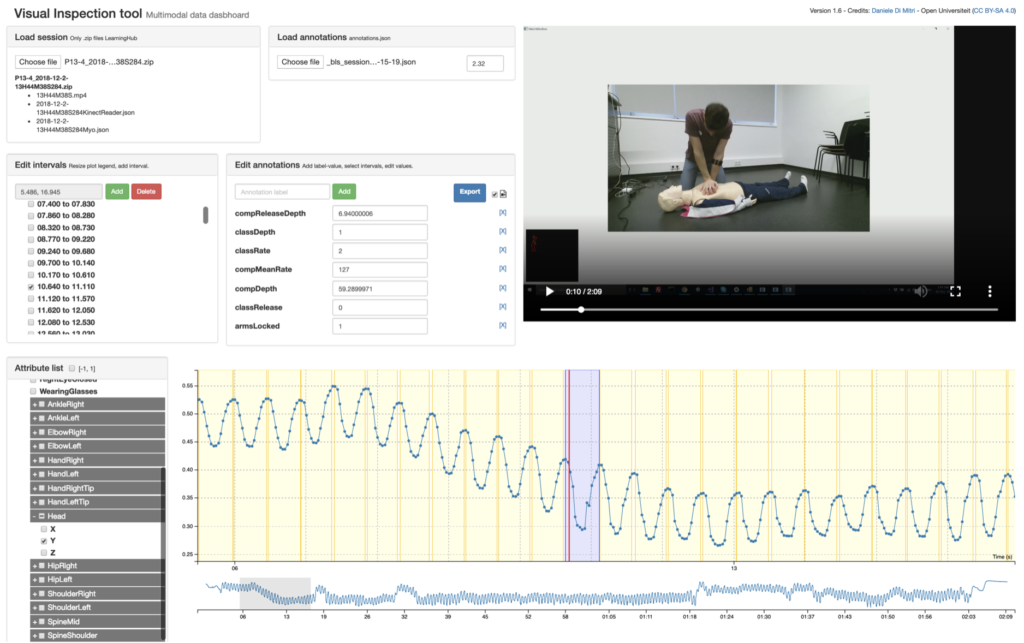

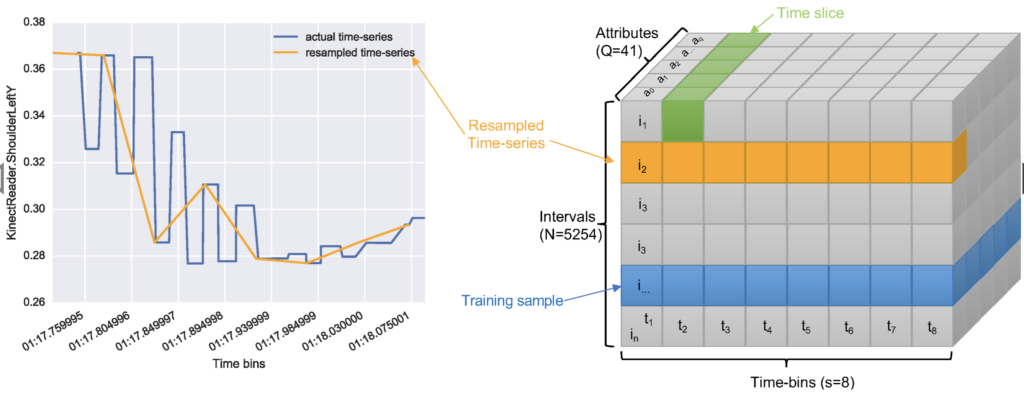

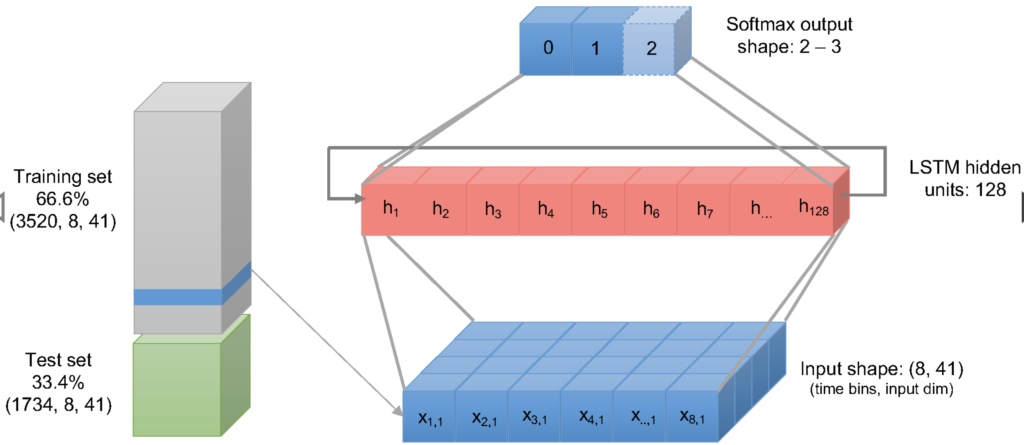

This study investigated to what extent multimodal data can be used to detect mistakes during Cardiopulmonary Resuscitation (CPR) training. We complemented the Laerdal QCPR ResusciAnne manikin with the Multimodal Tutor for CPR, a multi-sensor system consisting of a Microsoft Kinect for tracking body position and a Myo armband for collecting electromyogram information. We collected multimodal data from 11 medical students, each performing two sessions of two-minute chest compressions (CCs). In total, we gathered 5254 CCs, all labelled according to five performance indicators corresponding to common CPR training mistakes. Three of the five indicators (CC rate, CC depth and CC release) were assessed automatically by the ResusciAnne manikin. The remaining two, related to arm and body position, were annotated manually by the research team. We trained five neural networks, one to classify each of the five indicators. The results of the experiment show that multimodal data can provide accurate mistake detection as compared to the ResusciAnne manikin baseline. We also show that the Multimodal Tutor for CPR can detect additional CPR training mistakes, namely those related to the correct use of arms and body weight. Thus far, these mistakes have been identified only by human instructors. Finally, to inform user feedback in future implementations of the Multimodal Tutor for CPR, we conducted a questionnaire to collect the aspects of CPR training considered valuable for feedback.
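The one-classifier-per-indicator setup described above can be illustrated with a minimal sketch. It is not the implementation used in the study: a plain logistic regression stands in for the neural networks, the data are synthetic rather than recorded Kinect and Myo signals, and the indicator names, `train_binary_classifier`, `predict` and `make_synthetic_dataset` are hypothetical names introduced only for this illustration.

```python
import math
import random

# Hypothetical names for the five performance indicators (assumption).
INDICATORS = ["cc_rate", "cc_depth", "cc_release", "arms_position", "body_position"]


def sigmoid(z):
    # Logistic function written to avoid overflow for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_binary_classifier(features, labels, epochs=200, lr=0.5):
    """Fit a logistic regression (stand-in for a neural network)
    with batch gradient descent; returns (weights, bias)."""
    n = len(features)
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(model, x):
    # Return 1 if the predicted probability is at least 0.5, else 0.
    weights, bias = model
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(score) >= 0.5 else 0


def make_synthetic_dataset(n_samples, seed=0):
    """Synthetic stand-in data: one feature vector per chest compression,
    where indicator k is labelled 1 when feature k is positive."""
    rng = random.Random(seed)
    features = [[rng.uniform(-1, 1) for _ in INDICATORS] for _ in range(n_samples)]
    labels = {
        name: [1 if x[k] > 0 else 0 for x in features]
        for k, name in enumerate(INDICATORS)
    }
    return features, labels


features, labels = make_synthetic_dataset(200)

# One independent binary classifier per performance indicator.
models = {name: train_binary_classifier(features, labels[name]) for name in INDICATORS}

# Training accuracy per indicator on the synthetic data.
accuracy = {
    name: sum(predict(models[name], x) == y for x, y in zip(features, labels[name]))
    / len(features)
    for name in INDICATORS
}
```

Training the indicators independently keeps each classifier a simple binary problem and allows labels from different sources (manikin output or manual annotation) to be handled separately; a single multi-label model would be an alternative design.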

Di Mitri, D.; Schneider, J.; Specht, M.; Drachsler, H. (2019) Detecting Mistakes in CPR Training with Multimodal Data and Neural Networks. Sensors 2019, 19, 3099.